Abstract

Background and Aims

Reinforcement learning (RL) is a promising option for adaptive and personalized control algorithms for the artificial pancreas. However, when RL algorithms are adapted to new domains where no natural reward function is given, as is the case for type 1 diabetes mellitus (T1DM), suitable reward functions have to be crafted by hand. This design process is susceptible to errors, and reward design is itself an open area of research within RL.

In this work, we train a single RL agent using several different reward functions in the hybrid closed-loop setting. We evaluate both generic and domain-knowledge-based reward functions.

Methods

We test eight different reward functions in silico on the Hovorka simulator. The reinforcement learning agent is trained using Trust-Region Policy Optimization (TRPO), a policy gradient algorithm that has previously shown competitive performance in controlling blood glucose levels in T1DM.

Performance is measured by the average reward and by the average time in range, time in hypoglycemia, and time in hyperglycemia. Test averages are computed over a total of 100 days with randomized meals and a fixed seed.
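To make the time-based metrics concrete, the following is a minimal sketch of how the fractions of time in range, in hypoglycemia, and in hyperglycemia could be computed from a simulated glucose trace. The function name `time_in_ranges`, the use of mg/dL, and the 70 and 180 mg/dL thresholds are assumptions made for illustration; the abstract does not state which thresholds or units were used.

```python
import numpy as np


def time_in_ranges(glucose_mg_dl, low=70.0, high=180.0):
    """Return the fraction of samples in range, below `low`, and above `high`.

    Assumes equally spaced glucose samples in mg/dL. The default thresholds
    (70 and 180 mg/dL) are illustrative assumptions, not values taken from
    the abstract.
    """
    g = np.asarray(glucose_mg_dl, dtype=float)
    if g.size == 0:
        raise ValueError("glucose trace must contain at least one sample")
    hypo = float(np.mean(g < low))    # fraction of samples below the range
    hyper = float(np.mean(g > high))  # fraction of samples above the range
    in_range = float(np.mean((g >= low) & (g <= high)))
    return {"in_range": in_range, "hypo": hypo, "hyper": hyper}


if __name__ == "__main__":
    # Hypothetical four-sample trace: one low, two in range, one high.
    print(time_in_ranges([60.0, 100.0, 150.0, 200.0]))
```

Averaging these fractions over all test days would give per-reward-function summary figures of the kind described above.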

Results

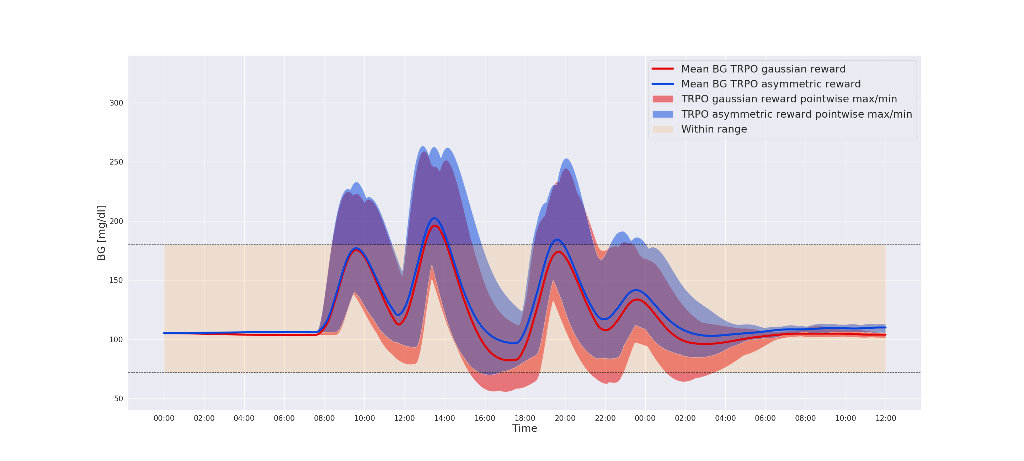

We test the algorithm on episodes lasting one and a half days, each containing four randomized meals and simulated carbohydrate-counting errors. Figure 1 shows an example comparing two reward functions: a Gaussian reward function and an asymmetric reward function designed to reduce time spent in hypoglycemia.
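As a rough illustration of the two reward shapes compared in Figure 1, the sketch below defines a symmetric Gaussian reward and one possible asymmetric variant that falls off faster below the target than above it. The exact functional forms and parameters used in the experiments are not given in this abstract, so the target of 108 mg/dL, the widths, and the two-sided Gaussian construction are all assumptions.

```python
import math

# Hypothetical target glucose level in mg/dL (an assumption for illustration).
TARGET = 108.0


def gaussian_reward(glucose, target=TARGET, sigma=30.0):
    """Symmetric Gaussian reward: 1 at the target, decaying equally on both sides."""
    return math.exp(-((glucose - target) ** 2) / (2.0 * sigma ** 2))


def asymmetric_reward(glucose, target=TARGET, sigma_low=15.0, sigma_high=30.0):
    """Two-sided Gaussian reward with a narrower width below the target.

    Using sigma_low < sigma_high makes the reward drop faster on the
    hypoglycemic side, so low glucose is penalized more than an equally
    large excursion above the target. This is one possible asymmetric
    design, not necessarily the one used in the experiments.
    """
    sigma = sigma_low if glucose < target else sigma_high
    return math.exp(-((glucose - target) ** 2) / (2.0 * sigma ** 2))


if __name__ == "__main__":
    for g in (78.0, 108.0, 138.0):
        print(g, round(gaussian_reward(g), 3), round(asymmetric_reward(g), 3))
```

With these assumed parameters, a reading 30 mg/dL below the target receives a lower reward than one 30 mg/dL above it under the asymmetric function, while the Gaussian function treats both equally.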

Conclusions

Our in silico experiments show that by tuning the reward function using domain-specific knowledge of T1DM, we are able to avoid hypoglycemic events while increasing overall time in range.